Synergy

Synergy is one of those words that caught fire with the general public in the late 20th century, especially in tech-related fields. In general, it is taken to mean the interaction of two or more things (organizations, substances, products, fields, etc.) that produces a greater effect when combined than separately. Examples include two colleges working jointly on a project, or the cooperation among pharmaceutical researchers in developing the COVID-19 vaccines.

But the word synergy is not a recent addition to the language. It appeared in the mid-19th century, mostly in the field of physiology, where it described the interaction of organs. It comes from the Greek sunergos, meaning "working together," which in turn comes from sun- "together" + ergon "work."

It has been used in diverse ways. In Christian theology, it was said that salvation involves synergy between divine grace and human freedom. I received a wedding engagement announcement that talked about the synergy between the two people. (They do both work in tech fields.)

Informational synergies, which can also be applied in media, involve compressing the time needed to transmit, access and use information. The flows, circuits and means of handling information are based on a complementary, integrated, transparent and coordinated use of knowledge.[32]

Walt Disney is cited as an example of pioneering synergistic marketing. Back in the 1930s, the company licensed the right to use the Mickey Mouse character in products and ads to dozens of firms. These products helped advertise its films. This kind of marketing is still used in media. For example, Marvel films are promoted not only by the company and the film distributors but also through licensed toys, games and posters.

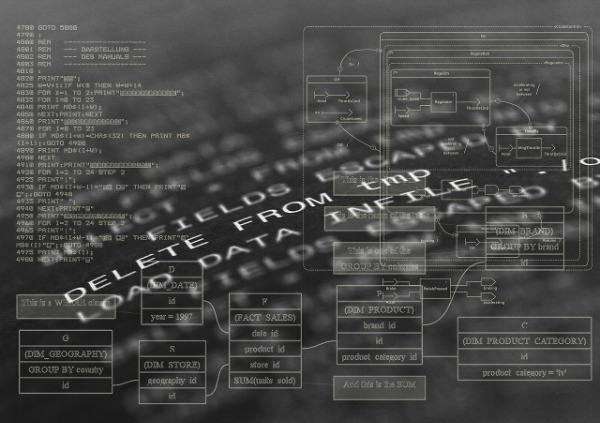

Shifting to tech, synergy can also be defined as the combination of human strengths and computer strengths. The use of robots and AI is a clear example. If you read into information theory, you will find discussions of synergy, which arises when multiple sources of information taken together provide more information than the sum of the information provided by each source alone.
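To make the information theory idea concrete, here is a minimal sketch using the textbook XOR example, which is my own choice of illustration and not something taken from a particular source. It assumes two fair, independent bits `x1` and `x2` and a target `y = x1 XOR x2`. The helper `mutual_information` is a hypothetical name written just for this post.

```python
from collections import Counter
from itertools import product
from math import log2


def mutual_information(pairs):
    """Mutual information I(A;B) in bits, given a list of equally likely (a, b) outcomes."""
    n = len(pairs)
    joint = Counter(pairs)
    p_a = Counter(a for a, _ in pairs)
    p_b = Counter(b for _, b in pairs)
    return sum(
        (count / n) * log2((count / n) / ((p_a[a] / n) * (p_b[b] / n)))
        for (a, b), count in joint.items()
    )


# Assumed toy setup: two fair, independent bits and their XOR.
outcomes = [(x1, x2, x1 ^ x2) for x1, x2 in product([0, 1], repeat=2)]

# Information each source carries about y on its own.
i_x1 = mutual_information([(x1, y) for x1, _, y in outcomes])
i_x2 = mutual_information([(x2, y) for _, x2, y in outcomes])

# Information both sources carry about y when taken together.
i_both = mutual_information([((x1, x2), y) for x1, x2, y in outcomes])

print(f"I(x1; y)     = {i_x1:.1f} bits")
print(f"I(x2; y)     = {i_x2:.1f} bits")
print(f"I(x1, x2; y) = {i_both:.1f} bits")
```

In this toy case, each bit alone tells you nothing about the result (0 bits), while the two together determine it completely (1 bit). The whole is greater than the sum of the parts, which is the sense of synergy described above.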

In education, synergy can be when schools and colleges, departments, disciplines, researchers,
