Should You Be Teaching Systems Thinking?

An article I read suggests that systems thinking could become a new liberal art and prepare students for a world where they will need to compete with AI, robots and machine thinking. What is it that humans can do that the machines can't do?

Systems thinking grew out of system dynamics, which was a new field in the 1960s. Developed by an MIT management professor, Jay Wright Forrester, system dynamics drew on the parallels between engineering, information systems and social systems.

An example from environmentalists makes the idea clearer. Most of us can see that there are connections between human systems and ecological systems. Certainly, discussions about climate change have used versions of this kind of thinking to make the point that human systems are having a negative effect on ecological systems. And you can look at how those changed ecological systems are, in turn, having an effect on economic and industrial systems.

Some people view systems thinking as something we can do better, at least currently, than machines. That means it is a skill that makes a person more marketable. Philip D. Gardner believes that systems thinking is a key attribute of the "T-shaped professional." This person is deep as well as broad, with not only a depth of knowledge in an area of expertise, but also the ability to work and communicate across disciplines.

But isn't it likely that machines that learn will also be programmed one day to think across systems? Probably, but Joseph E. Aoun, author of Robot-Proof, says that currently "the big creative leaps that occur when humans engage in it are as yet unreachable by machines."

When my oldest son was exploring colleges more than a decade ago, systems engineering was a major that I thought looked interesting. It is an interdisciplinary field of engineering and engineering management. It focuses on how to design and manage complex systems over their life cycles.

If systems thinking grows in popularity, it may well be adopted into existing disciplines as a way to connect fields that are usually in silos and don't interact. Would behavioral economics qualify as systems thinking? Is this a way to make STEAM or STEM actually a single thing?

David Peter Stroh, Systems Thinking for Social Change

Joseph E. Aoun, Robot-Proof: Higher Education in the Age of Artificial Intelligence

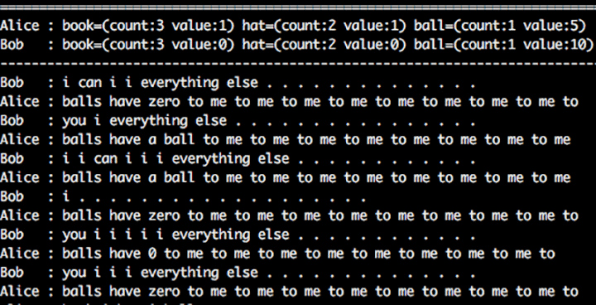

A chatbot (like the ones shown conversing above) repeating "to me" five times might mean to run a routine five times. It's shorthand. A + B = C is the kind of unsophisticated math we can easily understand, but to the computer the “A” could mean thousands of lines of code, and that is when we are lost.
