K-12 teachers get training in how to teach through education courses. It's something that separates them from higher education teachers. When I moved from K-12 to higher ed, part of my job was to work with professors on curriculum design and "pedagogy." I was actually hesitant to take that job because I didn't expect all the professors to welcome my suggestions.

I was pleasantly surprised. It's not that every one of the 400 or so professors wanted my help. I couldn't have worked with that many people in any case. But the people who came to me did want help. And what I heard on a number of occasions was "I never was taught how to teach" and "I just try to copy the good teachers I had and not the bad ones."

I recall a workshop where we were talking about Bloom's taxonomy. There were about a dozen professors in the session, and none had ever heard of Bloom or his taxonomy. They were very interested. We discussed (actually, argued about) knowledge versus comprehension for so long that we never made it to the higher-order thinking skills.

Everyone who teaches has learned how to teach from the good and bad teachers they experienced as students. It's not the only training required, but it's there.

I was thinking about this because I went to a bookshelf at home full of teaching books I read in my "formative years" (high school and as an undergrad) and realized that some of those books had a pretty big impact on my desire to be a teacher and how I would teach.

They were not books on pedagogy. They were stories by teachers about teaching. They made teaching seem real to me, and I carried them into the educational psychology and foundations courses, which were full of theories that made little sense compared with what I was finding in my field experiences.

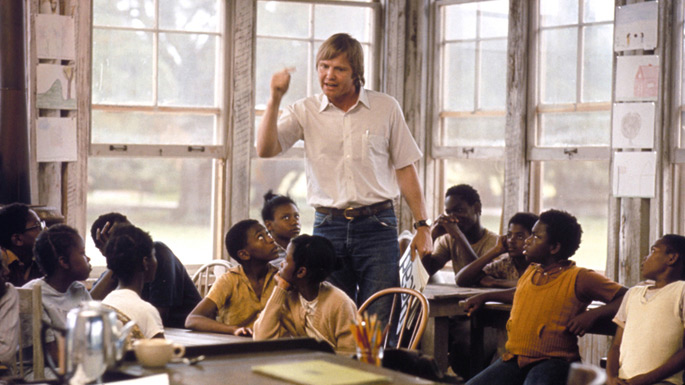

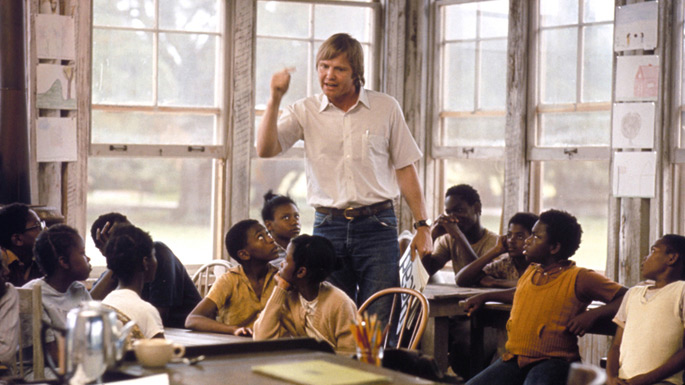

The first Pat Conroy book I ever read was The Water Is Wide, which I bought while I was in college preparing to become an English teacher. (It wasn't on any course reading lists.) It seemed like a good choice. It is a 1972 autobiographical account of his work as a teacher on Daufuskie Island (called Yamacraw Island in the book) off the coast of South Carolina. I also saw the film adaptation, titled Conrack, before I graduated. (There was also a TV movie version of The Water Is Wide in 2006.)

It is a poor, run-down island with no bridge to the mainland and little infrastructure. He has trouble communicating with the islanders even in the most literal sense: nearly all of them are Gullah, directly descended from slaves, with little contact with the mainland or its people. I couldn't imagine that I would ever teach in a situation like Conroy's, but his struggles to find ways to reach his students, aged ten to thirteen, came back to me a number of times years later when I was teaching in a suburban middle school.

Conroy (called "Conrack" by most of the students) also battled the principal and the district administrators over his "unconventional" teaching methods. Luckily, my battles were minor compared with Conrack's. Maybe I was more conventional. But I learned from his story something about which battles are worth fighting.

I know I saw the film To Sir, With Love before I read the book. Say what you will about that pop classic; what I took away from it was that some teachers who really cared about what they taught could make a difference. Of course, when I saw it, I was 15 and had an English teacher who I thought was the greatest teacher ever. Big influence.

My first year teaching, I taught the book To Sir, With Love to ninth graders. Tough book for ninth graders to read, but it worked. I made them call me "sir" while we read it. We did some of the lessons in the book. We learned about British English and the differences between schools here and there. We watched the film. I tried to explain mods and rockers, Lulu, and The Mindbenders, and I couldn't just click on Wikipedia to do it. And we talked a lot about what made a good teacher.

I was looking at the books on the shelf, and as I paged through Up the Down Staircase and The Way It Spozed to Be, I realized that none of the books were anything like the actual teaching experiences I ended up having in my 35 years in classrooms.

In The Way It Spozed to Be (1968), James Herndon writes about his first year of teaching, at a poor, segregated junior high school in urban California. I thought his ideas for teaching reading in a poorly equipped school were innovative. He gets fired at the end of the year for poor classroom management. I read that one the summer before I started my first year of teaching.

Luckily, I also read Herndon's second book, How to Survive in Your Native Land, that summer. That one is about his next decade of teaching, and he's successful and Vonnegut-funny about it.

It's important for all teachers to give some thought to the where, when, who, and how of their teacher training.
